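On the question of Translator, we searched master's and doctoral theses and books published in Taiwan, and we recommend Quantitative Literary Analysis of Aphra Behn’s Works by Runge, Laura L. and Table for One: Stories by Yun, Ko-Eun. Both offer the reviews you need.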

In addition, the Longman translator website explains: Use LDOCE Online Translate to quickly translate words and sentences in English, Spanish, Japanese, Chinese and Korean. Instant translation with definitions ...

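The first thesis, 基於原生架構設計與實現應用WebRTC的即時多媒體通訊 ("Design and Implementation of Real-Time Multimedia Communication Using WebRTC on a Native Architecture", 2021), was written by 陳哲偉 under the supervision of 黃士嘉 in the Department of Electronic Engineering, National Taipei University of Technology. It identifies the key factors behind Translator as WebRTC, real-time multimedia communication, and native architecture.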

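The second thesis, 基於機器學習技術之別名分析 ("Alias Analysis Based on Machine Learning Techniques", 2021), was written by 鄭禔陽 under the supervision of 陳鵬升 at the Graduate Institute of Computer Science and Information Engineering, National Chung Cheng University. It arrives at its answer to Translator through a focus on aliasing, natural language processing, and neural networks.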

Finally, the American Translators Association website adds: Professional translators and interpreters connect us to our world. When you care about your customers and quality, you need an ATA member.

Quantitative Literary Analysis of Aphra Behn’s Works

To address the question of Translator, the author Runge, Laura L. writes:

Aphra Behn (1640-1689), prolific and popular playwright, poet, novelist, and translator, has a fascinating and extensive corpus of literature that plays a key role in literary history. The book offers an analysis of all of Behn’s literary output. It examines the author’s use of words in terms of frequencies and distributions, and stacks the oeuvre in order to read Behn’s word usage synchronically. This experimental analysis of Behn’s literary corpus aims to provide a statistical overview of Behn’s writing and a study of her works according to the logic of the concordance. The analysis demonstrates the interpretive potential of digital corpus work, and it provides a fascinating reading of synchronic patterns in Behn’s writing. The book aims to augment the practice of close reading by facilitating rapid moves from the full corpus to unique subsets, individual texts, and specific passages. It facilitates connections among works that share verbal structures that would otherwise not register in diachronic reading.

Each chapter focuses on one type of writing: poetry, drama, and prose. The chapters begin with an overview of the documents that make up the corpus for the genre (e.g. the 80 published poems attributed to Behn in her lifetime; 18 plays and 12 prose works). A section on statistical commonplaces follows (length of texts, vocabulary density, and most frequent words). Interesting textual examples are explored in more detail for provocative close readings. The statistics are then contextualized with the general language reference corpus for a discussion of keywords. Each chapter also features a unique comparative study that illustrates Behn in a specific context through comparative statistical experiments within the genre.

The conclusion compares all three genres to provide a study of Aphra Behn’s oeuvre as a whole. The discussion is focused through the lens of Behn’s most remarkable words. Her keywords, compared against the literary works in the general language reference (fifteen texts) with proper names and stage directions removed, provide an index of Behn’s characteristic themes and qualities: oh, young, lover, love, marry, charming, heart, gay, soft, goes. Each of these words opens a window on her corpus as a whole. A unique case study of a significant author using new literary methodologies, this book provides an appealing snapshot of Behn’s whole career informed by deep knowledge of the Restoration era and developments in digital humanities and cultural analytics.
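The blurb names three measurements (word frequencies, vocabulary density, and keywords against a general reference corpus) but does not say how each is computed. As a rough illustration only, the Python sketch below assumes that vocabulary density means distinct word types divided by total tokens, and it scores keywords with Dunning's log-likelihood (G²), one common keyness statistic. The book may use different definitions, and the function names and toy texts here are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case word tokens; a straight apostrophe may join two parts ("lover's")."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def most_frequent(tokens, n=10):
    """The n most frequent words with their counts."""
    return Counter(tokens).most_common(n)


def vocabulary_density(tokens):
    """Assumed definition: distinct word types / total tokens."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def log_likelihood(a, b, c, d):
    """Dunning's G2 for a word seen a times in a study corpus of c tokens
    and b times in a reference corpus of d tokens."""
    total = a + b
    e1 = c * total / (c + d)  # expected count in the study corpus
    e2 = d * total / (c + d)  # expected count in the reference corpus
    g2 = 0.0
    if a > 0:
        g2 += a * math.log(a / e1)
    if b > 0:
        g2 += b * math.log(b / e2)
    return 2.0 * g2


def keywords(study, reference, top=10):
    """Words over-represented in `study` relative to `reference`, ranked by G2."""
    c, d = len(study), len(reference)
    if c == 0 or d == 0:
        return []
    s_counts, r_counts = Counter(study), Counter(reference)
    scored = []
    for word, a in s_counts.items():
        b = r_counts.get(word, 0)
        if a / c > b / d:  # skip words relatively more common in the reference
            scored.append((log_likelihood(a, b, c, d), word))
    scored.sort(reverse=True)
    return [(word, round(g2, 2)) for g2, word in scored[:top]]
```

With real data, `study` would hold the tokens of one genre subset of Behn's corpus and `reference` the tokens of the reference texts. The sketch omits the step the conclusion describes, removing proper names and stage directions, which would happen before counting.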

Trending videos about Translator

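We talked with TOTALFAT and Fire EX., two bands that have been active going back and forth between Japan and Taiwan, about their lives and music activities over the past year and a half, how they approach music, and more. They shared their passionate thoughts!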

Guest Artists Playlist ➫ https://spoti.fi/3eYW3YS

⬇︎ CLICK HERE FOR INFO ⬇︎ Next episode 👉👉👉 scheduled for release on 2021/10/07 (THU)

[[ Japan × Taiwan Musician Special Talk Related Articles ]]

https://our-favorite-city.bitfan.id/contents/33603 (Japanese)

Coming Soon (Traditional Chinese)

[[ Special Talk Monthly Guest ]]

🎸TOTALFAT

Gt.&Vo. Jose

Ba.&Vo. Shun

Drs.&Cho. Bunta

https://fc.totalfat.net/

https://www.instagram.com/totalfat_japan/

https://twitter.com/totalfat_crew

https://www.youtube.com/channel/UCsh7mxvKUN3yrWCiLJhsjkw/featured

https://open.spotify.com/artist/2Bxu9stwgeIGzYeTNRicKE

🎸滅火器 Fire EX.

Vo. 楊大正 Sam

Gt. 鄭宇辰 ORio

Ba. 陳敬元 JC

Drs. 柯光 KG

https://www.fireex.com.tw/

https://www.instagram.com/fireex_official/

https://www.facebook.com/FireEX/

https://www.youtube.com/c/%E6%BB%85%E7%81%AB%E5%99%A8FireEX

https://open.spotify.com/artist/7qBIgabdHdcr6NLujDxWAU

[[ Special Thanks ]]

Our Favorite City - Powered by Bitfan

https://our-favorite-city.bitfan.id/

https://www.instagram.com/ourfavoritecity/

https://www.facebook.com/OurFavoriteCity/

https://twitter.com/OurFavoriteCity

吹音樂 BLOW

https://blow.streetvoice.com/

Taiwan Beats

https://ja.taiwanbeats.tw/

https://www.instagram.com/taiwan_beats_jp/

https://twitter.com/TaiwanBeatsJP

------------------------------------------------------------------------------

☺︎ SAYULOG さゆログ ☺︎

------------------------------------------------------------------------------

Instagram ➫

https://www.instagram.com/sayulog_official/

Facebook ➫

https://www.facebook.com/sayulog/

Twitter ➫

https://twitter.com/sayulogofficial/

note ➫

https://note.com/sayulog

Pinterest ➫

https://www.pinterest.jp/sayulog_official/_created/

MORE INFO

https://www.sayulog.net/

https://linktr.ee/sayulog_official

📩 Business Inquiry (Japanese / Chinese / English / Turkish OK!)

------------------------------------------------------------------------------

☺︎ Music

YouTube Audio Library

☺︎ Logo Design

Ash

http://hyshung27.byethost8.com/

☺︎ YouTube Cover Design & Title Design & Illustration

Mai Sajiki

https://un-mouton.com/

☺︎ Translator

Keita 林嘉慶(Traditional Chinese)

https://www.instagram.com/mr.hayashi_/

#SAYUNOTE #OurFavoriteCity #ニッポンタイワンオンガクカクメイ #台日音樂藝人黑白配 #TOTALFAT #滅火器 #FireEX

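基於原生架構設計與實現應用WebRTC的即時多媒體通訊 (Design and Implementation of Real-Time Multimedia Communication Using WebRTC on a Native Architecture)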

To address the question of Translator, the author 陳哲偉 writes:

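Since 2020, the whole world has suffered from COVID-19, and the high transmissibility of the disease has had a severe impact on society. Weekly in-person morning meetings, where employees reported on their progress, were suspended. Remote communication tools such as Google Meet, Microsoft Teams, and Zoom, many of them built on WebRTC, sprang up, all aiming to find ways to bring life back on track during the pandemic. WebRTC (Web Real-Time Communication) is an architecture that streams data peer to peer over UDP. It provides real-time communication to mobile devices through application programming interfaces and is known for its very low latency, and implementing it on a native architecture maximizes control over the device. This thesis proposes the design and implementation of real-time multimedia communication using WebRTC on a native architecture, in the hope of contributing to the order being rebuilt in the post-pandemic era.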
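The abstract describes WebRTC as streaming data peer to peer over UDP with low latency. WebRTC itself layers ICE, DTLS, and SRTP/SCTP on top of UDP, and none of that is reproduced here. The Python sketch below only illustrates the underlying idea with plain standard-library UDP sockets on the loopback interface: each media chunk is tagged with a sequence number and a send timestamp, sent without a handshake, and the receiver measures per-packet delay. The header layout and function names are hypothetical and belong neither to WebRTC nor to the thesis.

```python
import socket
import struct
import time

# Hypothetical packet header: 32-bit sequence number + 64-bit send time in ns.
HEADER = struct.Struct("!IQ")


def make_packet(seq: int, payload: bytes) -> bytes:
    """Prefix one media chunk with its sequence number and send timestamp."""
    return HEADER.pack(seq, time.monotonic_ns()) + payload


def parse_packet(data: bytes):
    """Split a datagram back into (seq, send_time_ns, payload)."""
    seq, sent_ns = HEADER.unpack_from(data)
    return seq, sent_ns, data[HEADER.size:]


def stream_over_udp(chunks, timeout: float = 1.0):
    """Send chunks from one loopback UDP socket to another and return
    (seq, payload, delay_ms) per received datagram. Both ends run in this
    process, so they share the monotonic clock used for the delay."""
    chunks = list(chunks)
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # let the OS pick a free port
        receiver.settimeout(timeout)     # raise instead of blocking forever on loss
        peer = receiver.getsockname()
        for seq, chunk in enumerate(chunks):
            # Connectionless send: no handshake and no retransmission.
            sender.sendto(make_packet(seq, chunk), peer)
        results = []
        for _ in range(len(chunks)):
            data, _addr = receiver.recvfrom(65535)
            seq, sent_ns, payload = parse_packet(data)
            delay_ms = (time.monotonic_ns() - sent_ns) / 1e6
            results.append((seq, payload, delay_ms))
        return results
    finally:
        sender.close()
        receiver.close()
```

Because UDP guarantees neither delivery nor ordering, a real receiver would use the sequence numbers to detect loss and reorder packets; real-time media stacks typically do this in a jitter buffer. This sketch simply raises `socket.timeout` if a datagram never arrives.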

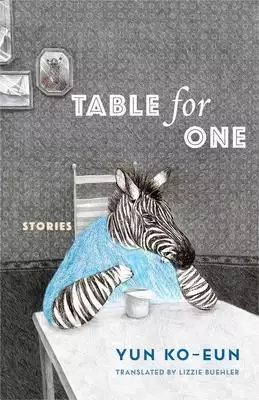

Table for One: Stories

To address the question of Translator, the author Yun, Ko-Eun writes:

An office worker who has no one to eat lunch with enrolls in a course that builds confidence about eating alone. A man with a pathological fear of bedbugs offers up his body to save his building from infestation. A time capsule in Seoul is dug up hundreds of years before it was intended to be unearthed. A vending machine repairman finds himself trapped in a shrinking motel during a never-ending snowstorm. In these and other indelible short stories, contemporary South Korean author Yun Ko-eun conjures up slightly off-kilter worlds tucked away in the corners of everyday life. Her fiction is bursting with images that toe the line between realism and the fantastic. Throughout Table for One, comedy and an element of the surreal are interwoven with the hopelessness and loneliness that pervade the protagonists' decidedly mundane lives. Yun's stories focus on solitary city dwellers, and her eccentric, often dreamlike humor highlights their sense of isolation. Mixing quirky and melancholy commentary on densely packed urban life, she calls attention to the toll of rapid industrialization and the displacement of traditional culture. Acquainting the English-speaking audience with one of South Korea's breakout young writers, Table for One presents a parade of misfortunes that speak to all readers in their unconventional universality.

Yun Ko-eun is the award-winning author of three novels and three short story collections. Born in 1980, she lives in Seoul. Lizzie Buehler is a translator from Korean and an MFA student in literary translation at the University of Iowa.

基於機器學習技術之別名分析 (Alias Analysis Based on Machine Learning Techniques)

To address the question of Translator, the author 鄭禔陽 writes:

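Alias analysis is an important part of a compiler and is closely tied to later code optimization. Most current compilers, such as GCC and LLVM, perform alias analysis with rule-based methods. These traditional methods are highly accurate but also time-consuming, especially on large programs. This thesis therefore adopts machine learning methods from natural language processing, which have become popular in recent years, to try to understand the "semantics" of code and analyze the source code directly. This approach greatly reduces the amount of computation and thereby speeds up the analysis. Moreover, the neural network outputs a probability rather than the yes/no classification of traditional methods, which allows more flexible use: in compiler speculation, for example, the expected value can be computed more directly. Experiments show that, compared with LLVM's original analysis tool, the model reaches a prediction accuracy of 92.6% with a threefold speedup.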
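The abstract's point that a probability is more useful than a yes/no label can be made concrete with a small expected-value calculation. The Python sketch below assumes a simple, hypothetical cost model for one speculative optimization, such as hoisting a load past a store that might alias it. Speculating costs `fast` cycles when the pointers do not alias, plus a `recover` penalty when they do. The conservative code always costs `safe` cycles. The cost model, names, and cycle numbers are illustrative assumptions and do not come from the thesis or from LLVM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeculationCosts:
    """Hypothetical cycle costs for one speculative optimization."""
    safe: float     # conservative code, always correct
    fast: float     # speculative code when the pointers do NOT alias
    recover: float  # extra rollback penalty when they DO alias


def expected_speculative_cost(p_no_alias: float, c: SpeculationCosts) -> float:
    """E[cost] = fast + P(alias) * recover."""
    if not 0.0 <= p_no_alias <= 1.0:
        raise ValueError("p_no_alias must be in [0, 1]")
    return c.fast + (1.0 - p_no_alias) * c.recover


def should_speculate(p_no_alias: float, c: SpeculationCosts) -> bool:
    """Speculate only when the expected cost beats the conservative code."""
    return expected_speculative_cost(p_no_alias, c) < c.safe


def break_even_probability(c: SpeculationCosts) -> float:
    """P(no alias) at which fast + (1 - p) * recover == safe.
    A result >= 1 means speculation never pays off; <= 0 means it always does."""
    if c.recover <= 0:
        raise ValueError("recover must be positive")
    return 1.0 - (c.safe - c.fast) / c.recover
```

With made-up costs of `safe=10`, `fast=4`, `recover=30`, the break-even point is p = 1 - (10 - 4) / 30 = 0.8. A model output of P(no alias) = 0.6 would be labeled "no alias" by a 0.5 threshold. Yet the expected cost of speculating (4 + 0.4 × 30 = 16 cycles) is worse than the 10-cycle conservative path, so the probability leads to the better decision.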

To learn more about Translator, be sure to check the topics below

Online reputation ranking for Translator

#1.WordReference.com: English to French, Italian, German ...

Free online dictionaries - Spanish, French, Italian, German and more. Conjugations, audio pronunciations and forums for your questions. Source: www.wordreference.com

#2.iTranslate: The Leading Translation and Dictionary App

Awesome and highly accurate translator! Vitór A. I love and rely on this app! Juha N. This app is of great help! Milan K. Source: itranslate.com

#3.Longman translator

Use LDOCE Online Translate to quickly translate words and sentences in English, Spanish, Japanese, Chinese and Korean. Instant translation with definitions ... Source: www.ldoceonline.com

#4.American Translators Association

Professional translators and interpreters connect us to our world. When you care about your customers and quality, you need an ATA member. Source: www.atanet.org

#5.Nice Translator - The fast, easy online translator

Translate in real-time as you type! You can even translate into multiple languages at once. Used and loved by millions. Source: nicetranslator.com

#6.TRANSLATOR.EU: Translate free and online

Translator.eu is an online multilingual text and phrase translator that provides translations into 42 languages. Its use is free of charge and does not ... Source: www.translator.eu

#7.Translate by Voice or Text in Real-Time with ... - Skype

Translate English to Spanish, French, and more. Get Skype Translator – a language translator that translates 10 spoken and 60 written languages in real ... Source: www.skype.com

#8.Translator and Interpreter Training: Issues, Methods ... - Books.com.tw (博客來)

Title: Translator and Interpreter Training: Issues, Methods and Debates; Language: English; ISBN: 9780826498052; Pages: 240; Author: Kearns, John (EDT), ... Source: www.books.com.tw

#9.GTranslate - Website Translator: Translate Your Website

GTranslate is a website translator which can translate any website to any language automatically and make it available to the world! Source: gtranslate.io

#10.Morse Code Translator

The translator can translate between Morse code and Latin, Hebrew, Arabic and Cyrillic alphabets. It can play, flash or vibrate the Morse code. Source: morsecode.world

#11.Register as a translator

Join our translation company! Large volume of translation jobs, quick payments and professional project management. Complete your translator profile online ... Source: translated.com

#12.Baidu Translate (百度翻译): translation among 200 languages, communicating with the whole world!

Baidu Translate offers instant, free translation for 200+ languages through web, app, and API products. It supports text, document, and image translation, meeting users' needs for word lookup, literature translation, contract translation, and more ... Source: fanyi.baidu.com

#13.Products : Translator 7 : Overview - Chicken Systems

Translator ™ 7 - Professional Instrument Conversion Software ... Take any sample library and Translator™ 7 can convert all of its Programs, Instruments, or Samples ... Source: www.chickensys.com

#14.Video Translator - Translate Videos Online - VEED.IO

Our video translator is completely online, no need to download software. Best of all, VEED is incredibly accurate in generating subtitles and translations. Source: www.veed.io

#15.Dictionary-enhanced text translation - PONS

Use the free text translator by PONS! Available in 38 languages with 12 million headwords and phrases. Source: en.pons.com

#16.Become a Human Translator | Stepes

Put your language skills to work, become a super-human translator or interpreter and earn money online. Our Marketplace is ideal for freelancers and remote ... Source: www.stepes.com

#17.Google Translate (Google 翻譯)

Google's free service instantly translates words, phrases, and web pages between English and more than 100 other languages. Source: translate.google.com

#18.What Is a Translator? Common Tasks, Types, & Skills | Upwork

Translators are responsible for translating written text from one language to another (usually a native language). They rely on advanced writing and translation ... Source: www.upwork.com

#19.What does a translator do? - CareerExplorer

A translator working on his computer, converting the written word from one language to another. Translators aid communication by converting written information ... Source: www.careerexplorer.com

#20.Presentation Translator for PowerPoint - Microsoft

As you speak, Presentation Translator displays subtitles directly on your PowerPoint presentation in any one of more than 60 supported text languages. This ... Source: www.microsoft.com

#21.translator - Deals and Recommendations - March 2023 | Shopee Taiwan (蝦皮購物台灣)

The popular translator products you are looking for are on Shopee! Buy translator products now in the Shopee Taiwan section for big discounts and shipping subsidies; with seller ratings, shopping online is safe and easy! Source: shopee.tw

#22.“translator” job openings - March 2023 - 104 Job Bank (104人力銀行)

2023/3/2 - 804 job openings | In-House Translator【和康生物科技股份有限公司】, Japanese interpreter and translator (日語翻譯)【17LIVE_藝啟股份有限 ... Source: www.104.com.tw

#23.SYSTRAN Translate: Online document translator for business ...

Text and document translation into 50 languages. Secure and precise in the legal, medical, and finance domains. Recognized by the largest companies. Source: www.systran.net

#24.Translate.com: Online Translator

Expert language solutions for any size of business. 25K+ professional translators. 90 language pairs. 24/7 online translation service. API. Source: www.translate.com

#25.Translation Services by Professional Translators | Fiverr

Affordable Translation Services. Find a freelance translator for hire, outsource your translation project and get it quickly delivered remotely online. Source: www.fiverr.com

#26.Bing Microsoft Translator - Translate from English to Arabic

Quickly translate words and phrases between English and over 100 languages. Source: www.bing.com

#27.The World's Worst Translator | Alternatino - YouTube

A crime boss's translator proves he's not up to the task. About Alternatino: Alternatino is a sketch show that follows Arturo Castro (“Broad ... Source: www.youtube.com

#28.Free Translation Online

... Online translates selected text, words, phrases between more than 104 languages using 3 translation providers Google, Microsoft Bing, Translator. Source: translation2.paralink.com

#29.translator - Schema.org Property

Schema.org Property: translator - Organization or person who adapts a creative work to different languages, regional differences and technical requirements ... Source: schema.org

#30.Translation portal: translation jobs, translation agencies ...

Portal for translators and translation agencies. Translation jobs. Database of freelance translators. Database of translation agencies. Source: www.translationdirectory.com

#31.Analytics translator: The new must-have role | McKinsey

Translators then tap into their working knowledge of AI and analytics to convey these business goals to the data professionals who will create ... Source: www.mckinsey.com

#32.Translators without Borders

Translators without Borders is a 501(c)(3) nonprofit helping people get vital information and be heard, whatever language they speak. Source: translatorswithoutborders.org

#33.Translator | Figma Community

Translator instantly translates text in your Figma designs into other languages. Backed by the same engine that powers Google Translate, you can experiment ... Source: www.figma.com

#34.Google Language Translator – WordPress plugin

Translate WordPress with the Google Language Translator multilanguage plugin, which lets you insert a Google Translate widget anywhere on your website. Source: wordpress.org

#35.GTC | Login

Welcome to Coursera's Global Translator Community. Help bring the world's best education to the world! Source: translate-coursera.org

#36.Questions about the meaning and usage of Translator - HiNative

Q: What does it mean by "a translator turns up in press photos between two world leaders lacking a common language"? Thx a lot. Source: tw.hinative.com

#37.Lecture Translator

Follow live and pre-recorded lectures with computer-generated transcripts and translations. Source: lecture-translator.kit.edu

#38.Translations (Symfony Docs)

Forcing the Translator Locale; Extracting Translation Contents and ... (i.e. "messages") by wrapping them in calls to the Translator ("Translations"); ... Source: symfony.com

#39.Translator - definition of translator by The Free Dictionary

trans·la·tor · 1. One that translates, especially: a. One employed to render written works into another language. b. A computer program or application that ... Source: www.thefreedictionary.com

#40.translator: Chinese (Traditional) translation - Cambridge Dictionary

translator translation: (尤指從事筆譯的)譯者,翻譯家 (a translator, especially of written texts). Learn more. Source: dictionary.cambridge.org

#41.Approved Translator List - ICBC

Please confirm the translator you choose is approved to translate in the direction you require. Not all ICBC approved translators are on this list. If you can't ... Source: www.icbc.com

#42.Translators Cafe

TranslatorsCafé.com: Directory of Translators, Interpreters and Translation Agencies! Visitors to TranslatorsCafé.com will find a new and very convenient way ... Source: www.translatorscafe.com

#43.Translators - Khan Academy

Learn for free about math, art, computer programming, economics, physics, chemistry, biology, medicine, finance, history, and more. Khan Academy is a ... Source: www.khanacademy.org

#44.Video Subtitle Translator - Rev.com

Give your English videos global reach with Rev subtitle translator. Reach audiences across the globe by adding subtitle translations in 15+ languages to ... Source: www.rev.com

#45.Enjoy instant voice translator on your phone calls - Palaver

Instant voice translation phone calls · Just pick up the app and dial – let Palaver do the rest. · To enjoy the instant voice translator simply add your contacts ... Source: www.palaverweb.com

#46.Greek English Translation, Online Text Translator LEXILOGOS

Type a text & select a translator: ... Source: www.lexilogos.com

#47.Dictionary and online translation between English and over 90 ...

Free online translation from English and other languages into Russian and back. The translator works with words, texts, web pages, and text in photos. Source: translate.yandex.com

#48.Presidency Machine Translation | Tilde | tilde.com

EU Council Presidency Translator Showcase ... journalists and guests in overcoming these challenges, Tilde has developed the EU Presidency Translator. Source: www.tilde.com

#49.Reverso | Free translation, dictionary

The world's most advanced translator in French, Spanish, German, Russian, and many more. Enjoy cutting-edge AI-powered translation from Reverso in 15+ ... Source: www.reverso.net

#50.Translation - Wikipedia

Translators, including early translators of sacred texts, have helped shape the very languages into which they have translated. Because of the laboriousness of ... Source: en.wikipedia.org

#51.Thing Translator by Dan Motzenbecker

Thing Translator. May 2017 | By Dan Motzenbecker. Take a picture of something to hear how to say it in a different language. Source: experiments.withgoogle.com

#52.NEW TRANSLATOR - bab.la

Find instant translations in 90+ languages including Spanish, French, German and many more. All our translations are done with pronunciations, definitions ... Source: en.bab.la

#53.translator | Dart Package - Pub.dev

translator.translate(input, from: 'ru', to: 'en').then(print); // prints Hello. Are you okay? var translation = await translator.translate("Dart is very ... Source: pub.dev

#54.Translator – Chinese/Japanese/Korean/Hebrew (草莓) | 東森 ...

Job opening in Zhonghe District, New Taipei City | Translator – Chinese/Japanese/Korean/Hebrew (草莓) | 東森得易購股份有限公司 (東森購物) (TV shopping) | Salary negotiable (regular salary 40,000, including ... Source: www.1111.com.tw

#55.Free Online Translator - Preserves your document's layout ...

Free, Online Document Translator which translates office documents (PDF, Word, Excel, PowerPoint, OpenOffice, text) into multiple languages, preserving the ... Source: www.onlinedoctranslator.com

#56.NAATI - a connected community without language barriers

NAATI is the national standards and certifying body for translators and interpreters in Australia. Source: www.naati.com.au

#57.Google Translator Toolkit

Google Translator Toolkit is a powerful and easy-to-use editor that helps translators work faster and better. Source: accounts.google.com

#58.How To Become A Translator - Forbes

For National Translation Month, I interviewed three freelance translators who work in various languages: Jennifer Croft, who translates from ... Source: www.forbes.com

#59.Voltage translators & level shifters | TI.com - Texas Instruments

Translators by input/output (IO) voltage range. Select a voltage translator from the industry's largest portfolio based off of the voltage levels needed for the ... Source: www.ti.com

#60.Translator job profile | Prospects.ac.uk

As a translator, you'll convert written material from one or more 'source languages' into the 'target language', making sure that the translated version conveys ... Source: www.prospects.ac.uk

#61.r/translator - the Reddit community for translation requests

r/translator: r/translator is *the* community for Reddit translation requests. Need something translated? Post here! We will help you translate any … Source: www.reddit.com

#62.A Day in the Life of a Translator | Phrase

Being a translator can be quite challenging, to say the least. Get a glimpse of what it means to deal with this craft day in and day out. Source: phrase.com

#63.How To Become A Translator

What skills will I need to be a translator? · A fluent (near-native) understanding of at least one foreign language (source language) · A solid understanding of ... Source: www.iti.org.uk

#64.Translator: job description - TargetJobs

Translation requires the individual to accurately convey the meaning of written words from one language to another. ... Working as a freelance translator is ... Source: targetjobs.co.uk

#65.Accurate Spanish-English Translations - SpanishDict

Access millions of accurate translations written by our team of experienced English-Spanish translators. AI-POWERED MACHINE TRANSLATION. Choose from Three ... Source: www.spanishdict.com

#66.Translate | Participate - TED

TED Translators are volunteers who subtitle TED Talks, and enable the inspiring ideas in them to crisscross languages and borders. Source: www.ted.com

#67.27-3091.00 - Interpreters and Translators - O*NET

Interpreters and Translators. 27-3091.00. Bright Outlook Updated 2023. Interpret oral or sign language, or translate written text from one language into ... Source: www.onetonline.org

#68.Google.com.hk

Google. translate.google.com.hk. Please bookmark our URL. ICP License 合字B2-20070004. Source: translate.google.cn

#69.Online Translator: Translation

Translation. Free Online Translation for albanian, arabic, bulgarian, catalan, chinese (simp.), chinese (trad.), croatian, czech, danish, dutch, estonian, ... Source: imtranslator.com

#70.Swift Translator

Define, validate and map any message format. As an easy-to-use, standalone translation solution, Swift Translator easily defines, maps and validates messages ... Source: www.swift.com

#71.Linguee | Dictionary for German, French, Spanish, and more

English Dictionary and Translation Search with 1,000,000,000 example sentences from human translators. Languages: English, German, French, Spanish, ... Source: www.linguee.com

#72.'Sorry, Peeps': Translator Bows Out of 'Impossible' Manga ...

“Impossible to translate” manga series Cipher Academy drives longtime translator Kumar Sivasubramanian to quit after 13 chapters. Source: slator.com

#73.Package translator - CTAN

translator – Easy translation of strings in LaTeX. This LaTeX package provides a flexible mechanism for translating individual words into different ... Source: ctan.org

#74.AI Translator

Introducing the AI Translator extension - the ultimate tool for seamless communication and understanding. With cutting-edge AI technology, ... Source: chrome.google.com

#75.Language Translator Demo - IBM

IBM Watson Language Translator Demo. Interested in Watson Language Translator? Source: www.ibm.com

#76.ProZ.com: Freelance translators & Translation companies

Translation service and translation jobs for freelance translators and translation agencies. Source: www.proz.com

#77.Coursera Global Translator Community - Feed Detail

JOIN COURSERA GLOBAL TRANSLATOR COMMUNITY. Have you ever wondered how Coursera's lecture subtitles are translated? Do you speak one or more foreign ... Source: www.coursera.support

#78.Translator | Explore careers - National Careers Service

Translators convert the written word from the 'source language' into the 'target language', making sure that the meaning is the same. Source: nationalcareers.service.gov.uk

#79.Slides Translator - Google Workspace Marketplace

Slides Translator automagically translates, reads aloud, or voice types in many languages in your Slides. Communicate with ease! Now includes Voice Typing ... Source: workspace.google.com

#80.Google Translator Toolkit (谷歌翻譯工具箱) - VoiceTube

Google Translator Toolkit (谷歌翻譯工具箱). Posted by Mei Kuo on January 13, 2021. Source: tw.voicetube.com

#81.Hire a translator or become a translator - Gengo

Become a Gengo translator and gain access to translation jobs giving you flexible income and the opportunity to improve your skills. Sign up for free. Source: gengo.com

#82.translator - Yahoo Kimo Dictionary (Yahoo奇摩字典) search results

譯者, 譯員, 翻譯; 翻譯家; 翻譯機 (translator, interpreter; translating machine). Dr.eye 譯典通 · translator · See more. IPA [ˌtrænzˈleɪtə(r)]. US. UK. n. 譯者; 翻譯程序 (translator; translation program). Oxford Chinese Dictionary (牛津中文字典). translator. Source: tw.dictionary.yahoo.com

#83.Language Translator - QuillBot AI

Need a translator fast? You may not know 30 languages, but QuillBot does. Use our AI-powered language translator for free online. Source: quillbot.com

#84.Discourse and the Translator - Page 18 - Google Books result

For the translator of Text 183 (New English Bible, 1961), however, both of these solutions are inadequate; neither 'a penny' nor 'a denarius' communicates ... Source: books.google.com.tw

#85.Profile | The translator - European Parliament

Our translators typically have a perfect command of their main language and sound foreign language skills in at least 2 other EU languages, but not necessarily ... Source: www.europarl.europa.eu

#86.The Interpreter - translator on the App Store

The Interpreter translator free acquires text from your voice or using the keyboard and in real time, translates it and reads it for you in one of the 70 ... Source: apps.apple.com

#87.English to Spanish Translation - ImTranslator

This translation tool includes online translator, translation dictionary, text-to-speech in a variety of languages, multilingual virtual keyboard, ... Source: imtranslator.net

#88.PROMT.One Translator - free online dictionary and text ...

Online translator from English to German and other languages, translation of words and phrases, dictionary and translation examples in English, German, ... Source: www.online-translator.com

#89.Google Translate - Apps on Google Play

Text translation: Translate between 108 languages by typing • Tap to Translate: Copy text in any app and tap the Google Translate icon to translate (all ... Source: play.google.com

#90.How To Become a Translator (Steps, Duties and Salary)

To become a translator, you must master a second language. You may have an advantage if you grew up in a bilingual household, though you can ... Source: www.indeed.com

#91.translator - Wiktionary

Noun · A person who converts speech, text, film, or other material into a different language. · (by extension) One that makes a new version of a source ... Source: en.wiktionary.org

#92.French ↔ English Translator with EXAMPLES | Collins

Translator. Translate your text for free. Over 30 languages available, including English, French, Spanish, German, Italian, Portuguese, Chinese and Hindi. Source: www.collinsdictionary.com

#93.The Translator's Dialogue: Giovanni Pontiero

The translator's task is to confront the challenge they offer and transpose their individual worlds as faithfully as possible. Source: books.google.com.tw

#94.The Translator: Vol 28, No 3 (Current issue)

Explore the current issue of The Translator, Volume 28, Issue 3, 2022. ... Article. Microhistorical research in translator studies: an archival methodology. Source: www.tandfonline.com

#95.DeepL Translate: The world's most accurate translator

Translate texts & full document files instantly. Accurate translations for individuals and Teams. Millions translate with DeepL every day. Source: www.deepl.com

#96.Antonio (Translator) | Department for General Assembly and ...

Antonio (Translator). What attracted you to become a language professional at the United Nations? Before joining the United Nations two years ago, ... Source: www.un.org

#97.Interpreters and Translators : Occupational Outlook Handbook

Translators convert written materials from one language into another language. The translator's goal is for people to read the target language ... Source: www.bls.gov

#98.Tagalog-English Online Translation and Dictionary - Lingvanex

We apply machine translation technology and Artificial intelligence for a free Tagalog English translator. Translate from English to Tagalog online. Need to ... Source: lingvanex.com